Apache HTTP Server Version 2.4


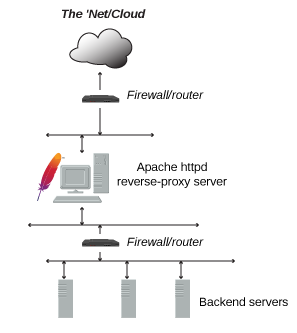

In addition to being a "basic" web server that provides static and dynamic content to end users, Apache httpd (like most other web servers) can also act as a reverse proxy server, also known as a "gateway" server.

In such scenarios, httpd itself does not generate or host the data; instead, the content is obtained from one or several backend servers, which normally have no direct connection to the external network. When httpd receives a request from a client, it proxies the request to one of these backend servers, which handles the request, generates the content, and sends it back to httpd. httpd then generates the actual HTTP response to the client.

There are numerous reasons for such an implementation; the most common are security, high availability, load balancing, and centralized authentication/authorization. It is critical in these implementations that the layout, design and architecture of the backend infrastructure (the servers which actually handle the requests) are insulated and protected from the outside; as far as the client is concerned, the reverse proxy server is the sole source of all content.

A typical implementation is below:

- Reverse Proxy
- Simple reverse proxying
- Clusters and Balancers
- Balancer and BalancerMember configuration
- Failover
- Balancer Manager
- Dynamic Health Checks
- BalancerMember status flags

The ProxyPass directive specifies the mapping of incoming requests to the backend server (or a cluster of servers known as a Balancer group). The simplest example proxies all requests ("/") to a single backend:

ProxyPass "/" "http://www.example.com/"

To ensure that Location: headers generated by the backend are modified to point to the reverse proxy, instead of back to the backend itself, the ProxyPassReverse directive is most often required:

ProxyPass "/" "http://www.example.com/"

ProxyPassReverse "/" "http://www.example.com/"

Only specific URIs can be proxied, as shown in this example:

ProxyPass "/images" "http://www.example.com/"

ProxyPassReverse "/images" "http://www.example.com/"

In the above, any request whose path starts with /images will be proxied to the specified backend; all other requests will be handled locally.
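A common pitfall with path-specific mappings is a mismatch in trailing slashes between the two ProxyPass arguments. The sketch below is a variant of the example above, under the assumption that the backend keeps its images under its own /images/ path (that backend layout is not stated in this guide). Both arguments end in a slash, so the remainder of the request path is appended cleanly.

```apache
# Assumption: the backend serves its images under /images/ as well.
# Both arguments end with "/", so the two paths line up.
ProxyPass        "/images/" "http://www.example.com/images/"
ProxyPassReverse "/images/" "http://www.example.com/images/"
```

With this mapping, a request for /images/logo.png (a hypothetical file name) would be forwarded to http://www.example.com/images/logo.png, while requests outside /images/ are still handled locally.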

As useful as the above is, it still has a deficiency: should the (single) backend node go down or become heavily loaded, proxying those requests provides no real advantage. What is needed is the ability to define a set or group of backend servers which can handle such requests, and for the reverse proxy to load balance and fail over among them. This group is sometimes called a cluster, but Apache httpd's term is a balancer. One defines a balancer using the <Proxy> and BalancerMember directives as shown:

<Proxy balancer://myset>

BalancerMember http://www2.example.com:8080

BalancerMember http://www3.example.com:8080

ProxySet lbmethod=bytraffic

</Proxy>

ProxyPass "/images/" "balancer://myset/"

ProxyPassReverse "/images/" "balancer://myset/"

The balancer:// scheme tells httpd that we are creating a balancer set, with the name myset. It includes two backend servers, which httpd calls BalancerMembers. In this case, any requests for /images will be proxied to one of the two backends. The ProxySet directive specifies that the myset balancer use a load balancing algorithm that balances based on I/O bytes.

BalancerMembers are also sometimes referred to as workers.
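The balancer configuration above only works when the relevant proxy modules are loaded. The following is a minimal sketch of the LoadModule lines that such a setup would typically need in httpd 2.4; the modules/ paths are assumptions that depend on how your installation is laid out, and many distributions enable these modules through their own mechanism instead.

```apache
# Module file paths are assumptions; adjust them to your installation.
LoadModule proxy_module              modules/mod_proxy.so
LoadModule proxy_http_module         modules/mod_proxy_http.so
LoadModule proxy_balancer_module     modules/mod_proxy_balancer.so
# Shared memory provider used by mod_proxy_balancer
LoadModule slotmem_shm_module        modules/mod_slotmem_shm.so
# Provides the lbmethod=bytraffic algorithm used in the example
LoadModule lbmethod_bytraffic_module modules/mod_lbmethod_bytraffic.so
```

If a different lbmethod is chosen, the matching mod_lbmethod_* module would need to be loaded instead.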

You can adjust numerous configuration details of the balancers and the workers via the various parameters defined in ProxyPass. For example, assuming we want http://www3.example.com:8080 to handle three times the traffic, with a timeout of 1 second, we would adjust the configuration as follows:

<Proxy balancer://myset>

BalancerMember http://www2.example.com:8080

BalancerMember http://www3.example.com:8080 loadfactor=3 timeout=1

ProxySet lbmethod=bytraffic

</Proxy>

ProxyPass "/images/" "balancer://myset/"

ProxyPassReverse "/images/" "balancer://myset/"
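Other worker parameters can be combined in the same way. As a hedged illustration, the sketch below adds the retry parameter, which controls how many seconds httpd waits before trying a worker in the error state again. The value of 30 seconds is an arbitrary assumption chosen for the example, not a recommendation from this guide.

```apache
<Proxy balancer://myset>
    # retry=30 (assumed value): wait 30 seconds before retrying a failed worker
    BalancerMember http://www2.example.com:8080 retry=30
    # loadfactor=3: three times the share of www2; timeout=1: one-second I/O timeout
    BalancerMember http://www3.example.com:8080 loadfactor=3 timeout=1 retry=30
    ProxySet lbmethod=bytraffic
</Proxy>
```

Note that Apache configuration comments must sit on their own lines, which is why each parameter is explained above the directive it applies to.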

You can also fine-tune various failover scenarios, detailing which workers and even which balancers should be accessed in such cases. For example, the setup below implements three failover cases:

- http://spare1.example.com:8080 and http://spare2.example.com:8080 are only sent traffic if one or both of http://www2.example.com:8080 or http://www3.example.com:8080 is unavailable. (One spare will be used to replace one unusable member of the same balancer set.)
- http://hstandby.example.com:8080 is only sent traffic if all other workers in balancer set 0 are not available.
- If all load balancer set 0 workers, spares, and the standby are unavailable, only then will the http://bkup1.example.com:8080 and http://bkup2.example.com:8080 workers from balancer set 1 be brought into rotation.

Thus, it is possible to have one or more hot spares and hot standbys for each load balancer set.

<Proxy balancer://myset>

BalancerMember http://www2.example.com:8080

BalancerMember http://www3.example.com:8080 loadfactor=3 timeout=1

BalancerMember http://spare1.example.com:8080 status=+R

BalancerMember http://spare2.example.com:8080 status=+R

BalancerMember http://hstandby.example.com:8080 status=+H

BalancerMember http://bkup1.example.com:8080 lbset=1

BalancerMember http://bkup2.example.com:8080 lbset=1

ProxySet lbmethod=byrequests

</Proxy>

ProxyPass "/images/" "balancer://myset/"

ProxyPassReverse "/images/" "balancer://myset/"

For failover, hot spares are used as replacements for unusable workers in the same load balancer set. A worker is considered unusable if it is draining, stopped, or otherwise in an error/failed state. Hot standbys are used if all workers and spares in the load balancer set are unavailable. Load balancer sets (with their respective hot spares and standbys) are always tried in order from lowest to highest.

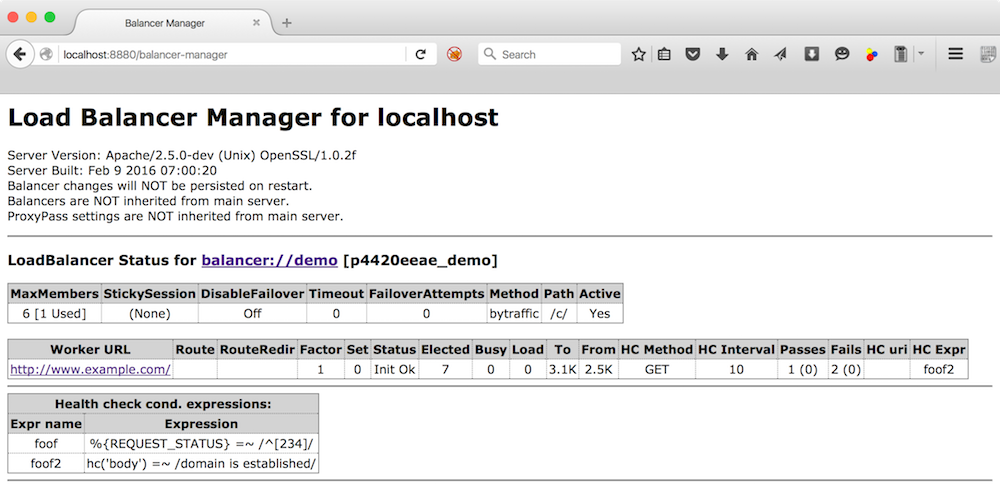

One of the most useful features of Apache httpd's reverse proxy is the embedded balancer-manager application. Similar to mod_status, balancer-manager displays the current working configuration and status of the enabled balancers and workers currently in use. However, not only does it display these parameters, it also allows for dynamic, runtime reconfiguration of almost all of them, including adding new BalancerMembers (workers) to an existing balancer. To enable this capability, the following needs to be added to your configuration:

<Location "/balancer-manager">

SetHandler balancer-manager

Require host localhost

</Location>

Do not enable the balancer-manager until you have secured your server. In particular, ensure that access to the URL is tightly restricted.
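One way to tighten access further is to restrict the handler by client address rather than by host name. The sketch below assumes a trusted administration network of 192.0.2.0/24, which is a documentation-only address range used here as a placeholder; substitute your own network.

```apache
<Location "/balancer-manager">
    SetHandler balancer-manager
    # Allow the local machine plus an assumed admin network (placeholder range).
    Require ip 127.0.0.1 ::1 192.0.2.0/24
</Location>
```

Address-based rules avoid the DNS lookups that host-based rules rely on, though whether that matters depends on your environment.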

When the reverse proxy server is accessed at that URL (e.g. http://rproxy.example.com/balancer-manager/), you will see a page similar to the one below:

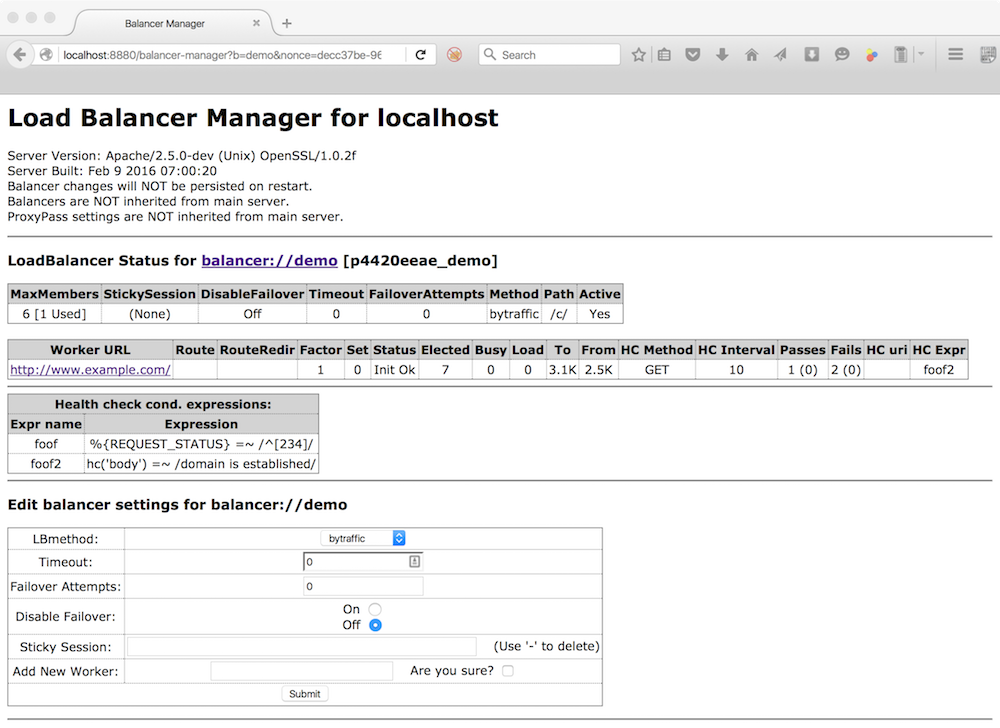

This form allows the administrator to adjust various parameters, take workers offline, change load balancing methods, and add new workers. For example, clicking on the balancer itself displays the following page:

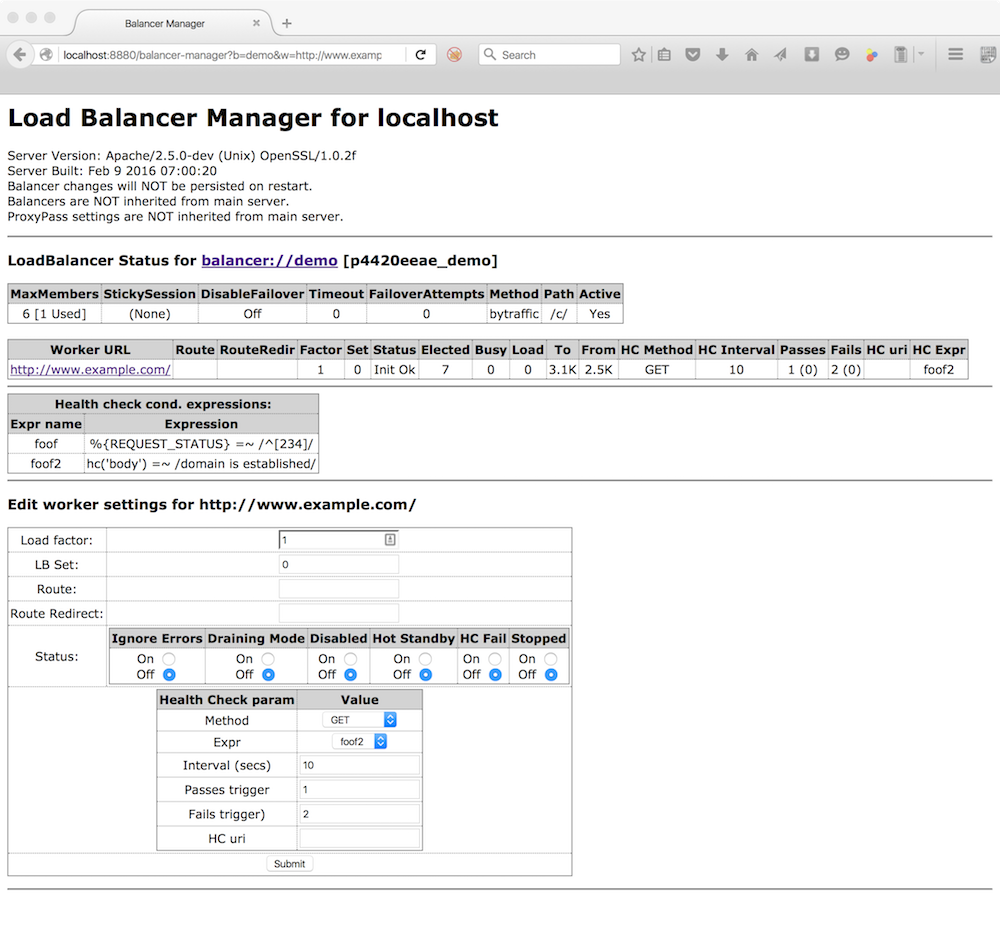

Clicking on a worker displays this page:

To have these changes persist across restarts of the reverse proxy, ensure that BalancerPersist is enabled.
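A minimal sketch of enabling persistence, assuming mod_proxy_balancer is already loaded and the directive is placed in the main server (or virtual host) configuration:

```apache
# Keep changes made through the balancer-manager across restarts.
BalancerPersist On
```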

Before httpd proxies a request to a worker, it can "test" whether that worker is available by setting the ping parameter for that worker using ProxyPass. Often it is more useful to check the health of the workers out of band, in a dynamic fashion. This is achieved in Apache httpd by the mod_proxy_hcheck module.
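As a rough illustration of out-of-band checks, the sketch below applies mod_proxy_hcheck to the myset balancer used earlier. The module paths, the 10-second interval, the expression name ok234, and the /status URI are all assumptions made for this example; the backend would need to actually serve whatever URI is checked.

```apache
# mod_proxy_hcheck depends on mod_watchdog; module paths are assumptions.
LoadModule watchdog_module     modules/mod_watchdog.so
LoadModule proxy_hcheck_module modules/mod_proxy_hcheck.so

# Named expression: a check passes when the response status is 2xx, 3xx or 4xx.
ProxyHCExpr ok234 {%{REQUEST_STATUS} =~ /^[234]/}

<Proxy balancer://myset>
    # Probe www2 with a HEAD request every 10 seconds (assumed interval).
    BalancerMember http://www2.example.com:8080 hcmethod=HEAD hcexpr=ok234 hcinterval=10
    # Probe www3 with a GET of /status (hypothetical URI on the backend).
    BalancerMember http://www3.example.com:8080 hcmethod=GET hcuri=/status hcexpr=ok234 hcinterval=10
</Proxy>
```

A worker that fails its checks is taken out of rotation until it passes subsequent checks, independently of client traffic.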

In the balancer-manager the current state, or status, of a worker is displayed and can be set/reset. The meanings of these statuses are as follows:

| Flag | String | Description |
|---|---|---|
| | Ok | Worker is available. |
| | Init | Worker has been initialized. |
| D | Dis | Worker is disabled and will not accept any requests; will be automatically retried. |
| S | Stop | Worker is administratively stopped; will not accept requests and will not be automatically retried. |
| I | Ign | Worker is in ignore-errors mode and will always be considered available. |
| R | Spar | Worker is a hot spare. For each worker in a given lbset that is unusable (draining, stopped, in error, etc.), a usable hot spare with the same lbset will be used in its place. Hot spares can help ensure that a specific number of workers are always available for use by a balancer. |
| H | Stby | Worker is in hot-standby mode and will only be used if no other viable workers or spares are available in the balancer set. |
| E | Err | Worker is in an error state, usually due to failing pre-request check; requests will not be proxied to this worker, but it will be retried depending on the retry setting of the worker. |
| N | Drn | Worker is in drain mode and will only accept existing sticky sessions destined for itself and ignore all other requests. |
| C | HcFl | Worker has failed dynamic health check and will not be used until it passes subsequent health checks. |
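The single-letter flags can also be set as a worker's initial state in the configuration through the status parameter, as the failover example already does with +R and +H. The sketch below is an assumed variation that starts one worker disabled, for instance so that it can be brought online later through the balancer-manager.

```apache
<Proxy balancer://myset>
    BalancerMember http://www2.example.com:8080
    # Start www3 in the disabled state (flag D); "+" sets a flag, "-" clears it.
    BalancerMember http://www3.example.com:8080 status=+D
    # Hot spare (flag R) that stands in for an unusable member of the same set.
    BalancerMember http://spare1.example.com:8080 status=+R
</Proxy>
```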
